Adding a robots.txt file to your website

The robots.txt file tells crawlers, such as Google's search bot, which parts of your website they may or may not visit. You can upload a robots.txt file in SiteManager.

In this article you'll learn how to add a robots.txt file to your website. The robots.txt file can be used to allow or disallow crawler access to certain parts of your website. By default, a crawler can access everything. (A noindex tag does not stop a page from being crawled; it only keeps the page out of search results.) For SEO reasons, it's considered good practice to add a robots.txt file anyway.

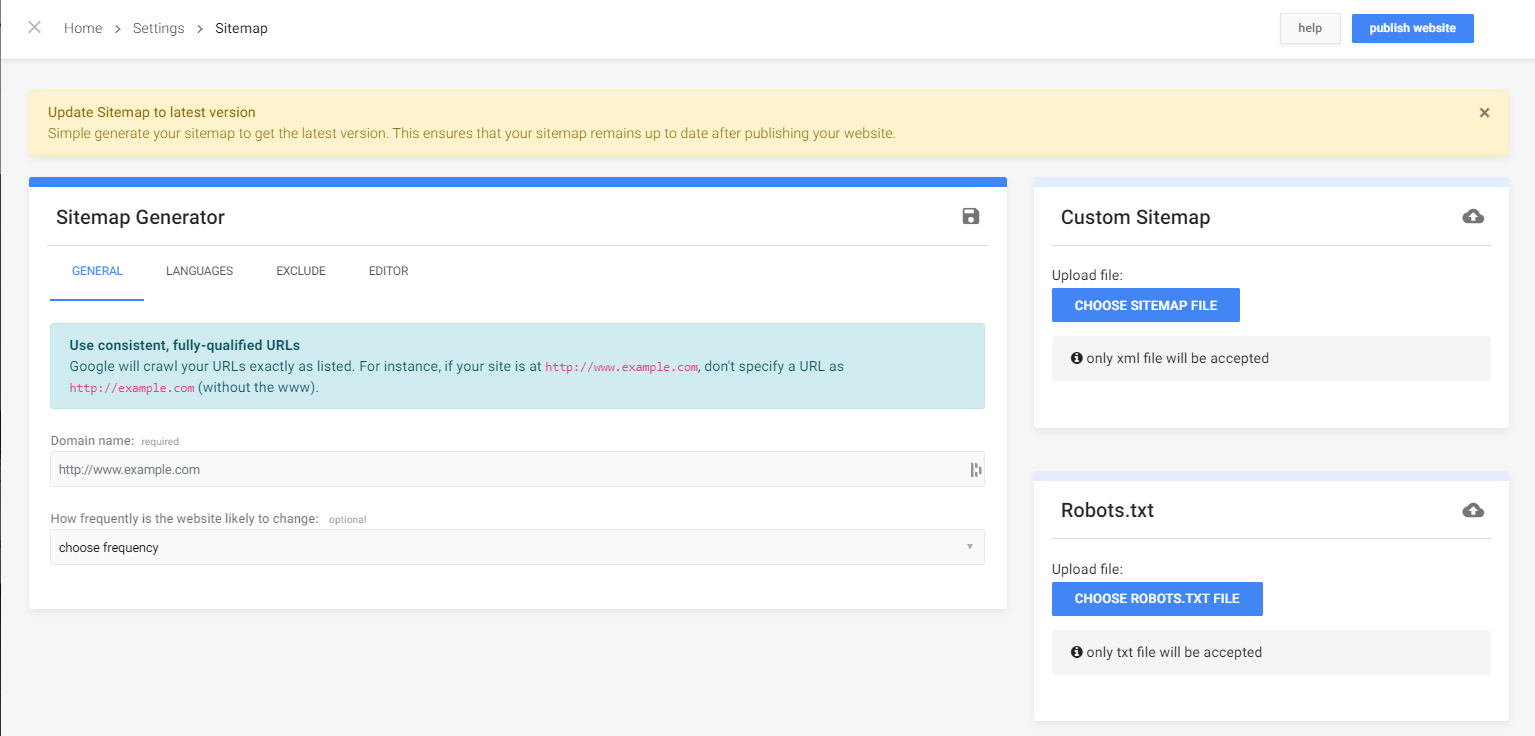

To upload the file, head to Project > SEO > Sitemap. This window will open:

In this window you can generate a sitemap (using the disk icon), upload a custom sitemap, or upload a robots.txt file.

Creating a robots.txt file

A robots.txt file is a plain-text file that you can write in any text editor, such as Windows Notepad. The file contains rules for bots. To learn more about writing your own custom robots.txt file, read this article by Google.

If your goal is to optimize your website for SEO and allow all crawlers, you can upload a robots.txt file that allows everything:

    User-agent: *
    Disallow:

An empty Disallow value disallows nothing, which means crawlers can crawl your entire website. The asterisk (*) is a wildcard, so the rule applies to any crawler.
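If you'd like to check a robots.txt file before uploading it, the following is a minimal sketch using the robots.txt parser in Python's standard library. It is only an illustration, not part of SiteManager: the helper name `is_allowed`, the `www.example.com` URLs, and the `/private/` folder are assumptions made for this example. It compares the allow-everything file from this article with a hypothetical variant that blocks one folder.

```python
from urllib.robotparser import RobotFileParser


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the given robots.txt text lets user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# The allow-everything file described in this article.
ALLOW_ALL = "User-agent: *\nDisallow:\n"

# Hypothetical variant that blocks one folder; /private/ is an illustrative path.
BLOCK_PRIVATE = "User-agent: *\nDisallow: /private/\n"

if __name__ == "__main__":
    page = "https://www.example.com/private/report.html"  # example URL, not a real site
    print("allow-all file, private page:", is_allowed(ALLOW_ALL, "Googlebot", page))
    print("blocking file, private page:", is_allowed(BLOCK_PRIVATE, "Googlebot", page))
    print("blocking file, home page:", is_allowed(BLOCK_PRIVATE, "Googlebot", "https://www.example.com/"))
```

Because neither file names a specific crawler, the wildcard group applies to Googlebot as well. Keep in mind that this only reflects how Python's parser reads the rules; individual crawlers may interpret edge cases differently.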